
Author: DaveS

A process simulation or model can range from simple algebraic calculations in an Excel sheet to complex electrolyte thermodynamic calculations, like those we encounter in our OLI Studio and Flowsheet tools. However, all simulators fight against the same fate: model disuse. Once a model is created, it is only as good as the person who runs it and extracts value from the results. In general, when this person provides value to the company and is promoted, there is little incentive for them to transfer this knowledge before moving on. This is expected: the individual starts focusing on the new role, which is natural for all of us. The person inheriting the model, having not been trained, then loses interest or confidence in it. The model falls out of use and, when it is time to renew the license, the new user has little appetite to do so.

The software industry understands that, to combat this “model abandonment” pattern, it needs to take the repetitive and complex task of running simulations ad hoc out of the equation. Having a model that runs continuously, fed with data from the historian at a predetermined frequency and without user interaction or the need to “press run,” is therefore paramount to this effort. From this realization, the digital twin was born. A digital twin allows operations engineers to extract value from models in a form that is easy to consume and understand for those who make day-to-day decisions, without spending time running and maintaining the models.

What is a digital twin?

There is no industry consensus on what a digital twin is. In fact, different companies (both consumers and providers) define it differently. At its core, however, a digital twin must provide a few basic functionalities to be useful.

First, a digital twin must be a mathematical representation of a process. This representation can be as simple as a model of a single machine that monitors and predicts its performance (for example, predicting vibration issues in a compressor), or as broad as a plant-wide flowsheet that tracks operational performance. At its core, the model needs to consume data directly from a historian and run calculations on that data at a predetermined frequency. The run frequency depends on the process and on the monitoring the user wishes to perform. For fouling, scaling and corrosion, the frequency could be once a day or once a week. Operational optimization (production rate and performance against specification) would probably need to run every 15 minutes to an hour. It is in those windows that the money is made. Thus, the pillars of a digital twin are:

- A mathematical representation of a unit or plant (model/simulation)

- The ability to read data from a historian

- The ability to run at a predetermined frequency without human intervention

- A snapshot in time of optimum run conditions, compared against the real asset’s performance

- Indication of deviation from optimum operations (a delta from optimum)

- Prescriptive insights on how to minimize or eliminate this delta
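The pillars above fit together as an unattended loop: read the historian, run the model, compare the optimum with the measured values, and hand the deviation to a reporting step. The sketch below shows that loop in Python. It is not how OLI's products implement a digital twin. `read_historian`, `run_model` and `publish` are hypothetical callables standing in for a real historian connection, a real simulator, and the prescriptive-reporting step. The tag name `naphtha_bph` is also made up.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical data shape: historian tag name -> value.
Readings = Dict[str, float]


@dataclass
class TwinReport:
    optimum: Readings  # model-calculated optimum: a snapshot in time
    actual: Readings   # measured values read from the historian
    delta: Readings    # optimum minus actual, for tags present in both


def run_cycle(read_historian: Callable[[], Readings],
              run_model: Callable[[Readings], Readings]) -> TwinReport:
    """One unattended cycle: read the historian, run the model, compare."""
    actual = read_historian()
    optimum = run_model(actual)
    delta = {tag: optimum[tag] - actual[tag] for tag in optimum if tag in actual}
    return TwinReport(optimum=optimum, actual=actual, delta=delta)


def run_forever(read_historian: Callable[[], Readings],
                run_model: Callable[[Readings], Readings],
                publish: Callable[[TwinReport], None],
                interval_s: float) -> None:
    """Repeat run_cycle at a fixed frequency with no human in the loop.
    `publish` stands in for the prescriptive-reporting step."""
    next_run = time.monotonic()
    while True:
        publish(run_cycle(read_historian, run_model))
        next_run += interval_s
        # Sleep until the next scheduled slot (no sleep if the cycle overran).
        time.sleep(max(0.0, next_run - time.monotonic()))


# Usage with stand-in callables instead of a real historian and simulator:
report = run_cycle(lambda: {"naphtha_bph": 950.0}, lambda _: {"naphtha_bph": 1000.0})
print(report.delta)  # {'naphtha_bph': 50.0}
```

Keeping one cycle (`run_cycle`) separate from the scheduler (`run_forever`) makes the comparison logic testable without waiting on a clock.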

Broadly, I see two different applications for digital twins: operational optimization and reliability monitoring. The drivers for each are very different, but both end up providing the same benefit: increased profitability.

Operational optimization allows the plant to compare itself to an optimum at every data point. For example, suppose my refinery is pushing for naphtha during the summer months. For the parameters I can set, the model tells me I should make X bbl/h of naphtha. However, my recorded product flow rate shows that the plant is making (X − delta) bbl/h, where delta is the number of barrels per hour of naphtha I am failing to produce. Meanwhile, the digital twin compares every available parameter in the model to its real-life counterpart. Every 15 minutes to an hour, the digital twin then provides a report. It tells me which parameters in my plant (those with a digital counterpart in the model) I need to move, and to what values, to shrink that delta and approach the maximum naphtha production my plant can achieve.
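The comparison in this example can be sketched in a few lines. The shortfall is the model optimum minus the measured rate. The report lists every parameter whose measured value differs from the model's optimum value by more than a tolerance, largest relative gap first.

The tag names, values and the 1% tolerance below are hypothetical and chosen only for illustration. A real report would also need to respect equipment limits that this sketch ignores.

```python
from typing import Dict, List, Tuple


def naphtha_shortfall(optimum_bph: float, measured_bph: float) -> float:
    """delta = X - measured rate: barrels per hour of naphtha not being produced."""
    return optimum_bph - measured_bph


def recommended_moves(model_setpoints: Dict[str, float],
                      plant_values: Dict[str, float],
                      tolerance: float = 0.01) -> List[Tuple[str, float, float]]:
    """Return (parameter, current value, target value) for each parameter whose
    measured value deviates from the model optimum by more than `tolerance`
    (as a fraction of the target), ordered by largest relative gap first."""
    gaps = []
    for name, target in model_setpoints.items():
        if name not in plant_values:
            continue  # no real-life counterpart being measured
        current = plant_values[name]
        rel_gap = abs(target - current) / max(abs(target), 1e-9)
        if rel_gap > tolerance:
            gaps.append((rel_gap, name, current, target))
    gaps.sort(reverse=True)
    return [(name, current, target) for _, name, current, target in gaps]


# Hypothetical model optimum vs. plant measurements.
model = {"reactor_outlet_T": 930.0, "reflux_ratio": 2.5, "feed_preheat_T": 600.0}
plant = {"reactor_outlet_T": 915.0, "reflux_ratio": 2.5, "feed_preheat_T": 560.0}

print(naphtha_shortfall(1000.0, 950.0))  # 50.0
moves = recommended_moves(model, plant)
for name, current, target in moves:
    print(f"Move {name} from {current} to {target}")
```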

Reliability monitoring allows a plant to run for the on-stream time scheduled for the year. If a plant has an on-stream factor of 95%, it plans to operate at full capacity for about 347 days of the year (0.95 × 365 ≈ 347), and budgets, targets and growth goals are set against this availability. Any issue that prevents the plant from meeting its on-stream target eats away at profits. Helping the plant reach its on-stream factor is therefore crucial for upper management to reach their goals, as their bonuses are tied to the plant’s performance and profitability growth. In addition, any project that increases a plant’s on-stream factor for the year adds profitability to the bottom line.
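The on-stream arithmetic is simple, and a quick calculation makes the cost of lost days concrete. Each unplanned outage day removes 1/365 (about 0.27 percentage points) from the achieved on-stream factor. The function names and the 10-day outage below are illustrative assumptions.

```python
DAYS_PER_YEAR = 365


def planned_onstream_days(onstream_factor: float) -> float:
    """Days per year the plant plans to run at full capacity."""
    return onstream_factor * DAYS_PER_YEAR


def achieved_onstream_factor(onstream_factor: float,
                             unplanned_outage_days: float) -> float:
    """On-stream factor actually achieved once unplanned outage days are lost."""
    return (planned_onstream_days(onstream_factor) - unplanned_outage_days) / DAYS_PER_YEAR


print(round(planned_onstream_days(0.95)))            # 347 (346.75 before rounding)
print(round(achieved_onstream_factor(0.95, 10), 4))  # 0.9226
```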

The reliability digital twin differs from the operational optimization digital twin in that it may not need to calculate performance. Instead, it calculates certain operational factors that indicate asset reliability (a simple one is the heat transfer coefficient of a heat exchanger). For example, a well-known midstream company “packaged” all the sensor data from a compressor station on a single dashboard and called this a digital representation of its compressor. The company then “cloned” this package, applied it to all the compressor stations along the pipeline, and called it a digital twin. The package monitored throughput, vibration, dP, etc., alerted the operators when any of these changed, and provided a report with insights into what might fix the issue.
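For the heat exchanger case, a reliability check can be built from textbook relations:

- U = Q / (A · LMTD)
- R_f = 1/U_actual − 1/U_clean

An alert fires when the fouling resistance R_f crosses a limit.

The sketch below assumes a pure counterflow exchanger (no LMTD correction factor) and takes the duty from the cold side. The readings, the clean U of 400 W/(m²·K) and the alert threshold are hypothetical.

```python
import math


def lmtd_counterflow(th_in: float, th_out: float, tc_in: float, tc_out: float) -> float:
    """Log-mean temperature difference for a pure counterflow exchanger (K)."""
    dt1 = th_in - tc_out  # terminal difference at the hot inlet end
    dt2 = th_out - tc_in  # terminal difference at the hot outlet end
    if dt1 <= 0 or dt2 <= 0:
        raise ValueError("non-positive terminal temperature difference; check tags")
    if math.isclose(dt1, dt2):
        return dt1  # limit of the LMTD formula when both ends are equal
    return (dt1 - dt2) / math.log(dt1 / dt2)


def overall_u(duty_w: float, area_m2: float, lmtd_k: float) -> float:
    """Overall heat transfer coefficient U = Q / (A * LMTD), in W/(m2*K)."""
    return duty_w / (area_m2 * lmtd_k)


def fouling_resistance(u_actual: float, u_clean: float) -> float:
    """Fouling resistance Rf = 1/U_actual - 1/U_clean, in m2*K/W."""
    return 1.0 / u_actual - 1.0 / u_clean


# Hypothetical readings: cold-side water heated from 30 to 80 °C at 2 kg/s.
duty = 2.0 * 4180.0 * (80.0 - 30.0)                # Q = m * cp * dT = 418,000 W
lmtd = lmtd_counterflow(150.0, 100.0, 30.0, 80.0)  # both ends 70 K, so LMTD = 70 K
u = overall_u(duty, area_m2=20.0, lmtd_k=lmtd)     # about 298.6 W/(m2*K)
rf = fouling_resistance(u, u_clean=400.0)          # about 8.5e-4 m2*K/W
RF_ALERT = 5e-4  # hypothetical alert threshold
if rf > RF_ALERT:
    print(f"Fouling alert: Rf = {rf:.2e} m2*K/W")
```

Trending `rf` over days or weeks, rather than reacting to a single reading, matches the once-a-day or once-a-week frequency suggested earlier for fouling monitoring.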

At its core, then, a digital twin gathers data from operations and compares calculated results against the real asset while running models or monitoring in real time (where “real time” is defined by the process the digital twin is applied to). The other part of the digital twin is its prescriptive reporting capability. It is not enough to say that the optimum value today is X, that you are at X − Y, and to trend the gap. The digital twin must be intelligent enough to tell the customer which “knobs” to turn to minimize or eliminate Y. This prescriptive report can be consumed via dashboards, emails, text messages, app notifications, etc. The point is that the information must come with prescriptive insights on how to reduce or eliminate the delta.
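The post does not say how a twin decides which knobs to turn. One simple approach, shown here only as an illustrative stand-in, linearizes the model around the current operating point and solves for the smallest combined knob move that closes the gap Y.

First, estimate the sensitivities g_i = ∂objective/∂knob_i by forward finite differences. The minimum-norm solution of Σ g_i·Δu_i = Y is then Δu = g·Y / (g·g). The knob names and the linear test model are hypothetical.

In practice, knobs in different units should be scaled before taking the Euclidean norm, and moves should be clipped to operating limits.

```python
from typing import Callable, Dict

Knobs = Dict[str, float]
Model = Callable[[Knobs], float]  # returns the objective, e.g. naphtha bbl/h


def sensitivities(model: Model, knobs: Knobs, step: float = 1e-3) -> Dict[str, float]:
    """Forward-difference estimate of d(objective)/d(knob) at the current point."""
    base = model(knobs)
    grads = {}
    for name, value in knobs.items():
        h = step * max(abs(value), 1.0)  # relative step, with a floor near zero
        perturbed = dict(knobs)
        perturbed[name] = value + h
        grads[name] = (model(perturbed) - base) / h
    return grads


def min_norm_moves(grads: Dict[str, float], gap: float) -> Dict[str, float]:
    """Smallest (Euclidean) set of knob changes that closes `gap`
    under the linearized model: delta_u = g * gap / (g . g)."""
    norm_sq = sum(g * g for g in grads.values())
    if norm_sq == 0.0:
        return {name: 0.0 for name in grads}  # objective insensitive to these knobs
    return {name: g * gap / norm_sq for name, g in grads.items()}


# Hypothetical linear model: objective = 2*a + b, current gap Y = 10.
toy_model: Model = lambda k: 2.0 * k["a"] + k["b"]
grads = sensitivities(toy_model, {"a": 100.0, "b": 50.0})
moves = min_norm_moves(grads, gap=10.0)  # approximately {'a': 4.0, 'b': 2.0}
```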

Of major importance to this effort, then, is the model’s ability to take the input data from the historian and converge within the prescribed frequency. If my digital twin calls for reports every 15 minutes, my model needs to converge within that time frame. Otherwise, the frequency at which digital twin insights are available is limited by the time it takes the model to converge, and this can have real “dollars and cents” implications.
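A small calculation shows how solve time limits report frequency. The sketch assumes a skip-if-busy policy: runs are scheduled on fixed slots, and any slot that falls while a solve is still running is skipped. That policy is an assumption, not something the post specifies. Under it, a 20-minute solve on a 15-minute schedule yields a report only every 30 minutes.

```python
import math
import time
from typing import Any, Callable, Tuple


def timed_solve(solve: Callable[[Any], Any], inputs: Any) -> Tuple[Any, float]:
    """Run one model solve and return (result, wall-clock seconds)."""
    start = time.perf_counter()
    result = solve(inputs)
    return result, time.perf_counter() - start


def effective_report_interval(solve_time_min: float, requested_interval_min: float) -> float:
    """Interval at which reports actually appear under a skip-if-busy policy:
    a scheduled run that falls while a solve is still in progress is skipped,
    so the next run waits for the first slot after the solve finishes."""
    slots = max(1, math.ceil(solve_time_min / requested_interval_min))
    return slots * requested_interval_min


print(effective_report_interval(10, 15))  # 15: the solve fits in the slot
print(effective_report_interval(20, 15))  # 30: every other slot is skipped
```

Logging the elapsed time from `timed_solve` on every cycle gives an early warning when convergence time starts creeping toward the reporting interval.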

Finally, the models that form the foundation of the digital twin need to be robust. I had a mentor who hated this word and would say, “What do you mean robust? If I throw it into a lake, will it come out swimming?” By robust, however, I mean that the model not only needs to run quickly but also needs to converge every time for small changes in its inputs. A model that is the basis of a digital twin needs to be flexible enough to accept input variations within a reasonable range and still converge. What this range is depends on the process simulated and on the software ecosystem the digital twin lives in. However, it is typical for plants to push rates up to 10% above design load, or to run at lower capacity due to demand. When developing a digital twin for a particular process, unit or plant, care should be taken to keep planned future operating changes in mind, especially as plants try to capture windows of opportunity. That said, the digital twin is not (and cannot be) expected to converge with input data from a shutdown or a drastic reduction in operating capacity. It should, however, start converging (and giving insights!) again as operations return to normal.
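One way to honor this range in practice is to gate each cycle on the current load before handing historian data to the model. The sketch below skips cycles below a minimum fraction of design load (shutdown or drastic rate cut) or above a maximum fraction. It resumes automatically once operations return to the envelope, because the gate is re-evaluated every cycle.

The 110% upper bound follows the "10% above design load" figure in the text. The 60% lower bound and all names are hypothetical.

```python
from enum import Enum


class RunDecision(Enum):
    RUN = "run model"
    SKIP_LOW_LOAD = "skip: shutdown or drastic rate cut"
    SKIP_HIGH_LOAD = "skip: beyond validated envelope"


def gate_inputs(feed_rate: float, design_rate: float,
                min_fraction: float = 0.6, max_fraction: float = 1.10) -> RunDecision:
    """Decide whether this cycle's historian data is inside the load range
    (as a fraction of design load) that the model was built and tested for."""
    load = feed_rate / design_rate
    if load < min_fraction:
        return RunDecision.SKIP_LOW_LOAD
    if load > max_fraction:
        return RunDecision.SKIP_HIGH_LOAD
    return RunDecision.RUN


print(gate_inputs(0.0, 1000.0).value)     # skip: shutdown or drastic rate cut
print(gate_inputs(1050.0, 1000.0).value)  # run model
```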

What is NOT a digital twin

Because there is no real definition of what a digital twin is, it is difficult to say what it is not. However, there is a trend that shows what companies are not yet willing to let their digital twins do: apply the prescriptive changes themselves. This would be what we call a “closed-loop” digital twin. In it, the prescriptive insights are tied to the DCS, and changes are made automatically to bring the asset’s operations to the optimum calculated by the digital twin running concurrently with the asset.

In addition, a digital twin is not a planning tool used to predict future operations. The digital twin looks at present operations to give insight into how operations can get back to the current optimum. Planning tools such as LPs, plant-wide models, etc., can be used for that work. Note that the model/simulation used in the digital twin can be the same tool used to predict future operations under changing feedstocks, operations, winter vs. summer conditions, etc. This model/simulation can live in the cloud or be accessed through a desktop application. The difference is that, as a planning tool, it is not automated, not set to run at a specific frequency, and not used to provide prescriptive reports on how to achieve an optimum.

What the Digital Twin enables

At the most basic level, the digital twin breaks down silos within a business, improving collaboration, increasing efficiency, and driving up profitability. Currently, in plants that do not operate under a digital twin philosophy, the business units that make up these plants are “siloed,” with visibility only of their own goals and targets. Because these units’ products become feeds to other units, a decision that pushes one unit’s profitability may not be the right decision for the unit that takes its products as feed.

For example, increasing the charge to a coker unit with purchased feed, to increase production of coker naphtha, may make sense from a unit-specific point of view. The naphtha hydrotreater may also have the capacity to take the extra naphtha, giving the impression that this move will upgrade the purchased feed and increase the refinery’s profitability. However, coker operations leadership may not have visibility of several knock-on effects:

- The availability and quality of the hydrogen needed to treat the additional coker naphtha.
- The crude unit will have to back out naphtha production and therefore change its diet.
- The naphtha may be off-spec, so that more alkylate or reformate is needed to increase octane.

Additionally, if the purchased feed contains chlorides, this naphtha may cause fouling and corrosion in the naphtha hydrotreater. The resulting shutdown may cost more than the benefit of running the purchased feed.

An active, operating digital twin therefore provides full-plant visibility to all involved parties. It shares with every unit, in real time, the ripple effects that one business unit’s decisions have on the entire plant. This enables collaboration as decisions are democratized: operational decisions become available and visible to all units, information barriers and silos are removed, and results are accounted for at the plant level.
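Tracing ripple effects amounts to walking a graph of which units a change can affect. The sketch below encodes a hypothetical "affects" graph loosely based on the coker example. A breadth-first search then lists every unit that should see a proposed change, nearest first. The unit names and edges are illustrative assumptions, not a real refinery configuration.

```python
from collections import deque
from typing import Dict, List

# Hypothetical "affects" graph: a change in a key unit can ripple into each listed unit.
AFFECTS: Dict[str, List[str]] = {
    "coker": ["naphtha_hydrotreater", "crude_unit", "gasoline_blending"],
    "naphtha_hydrotreater": ["hydrogen_network", "gasoline_blending"],
    "crude_unit": ["gasoline_blending"],
    "gasoline_blending": ["reformer", "alkylation"],
}


def impacted_units(graph: Dict[str, List[str]], changed_unit: str) -> List[str]:
    """Breadth-first search: every unit reachable from `changed_unit`,
    listed in order of increasing number of hops."""
    seen = {changed_unit}
    order: List[str] = []
    queue = deque([changed_unit])
    while queue:
        unit = queue.popleft()
        for nxt in graph.get(unit, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order


print(impacted_units(AFFECTS, "coker"))
```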

In addition, the digital twin does not have to be limited to a single plant; it can represent an entire business enterprise. Some companies have multiple assets (plants) whose products both go directly to consumers and serve as feeds to other assets. For them, the digital twin allows the entire enterprise to be optimized. A business enterprise with a direct line of communication between all its assets (information and data sharing, visibility of business decisions) can reduce inefficiencies and increase profits across its network of assets. This means that product streams can be optimized at a “global” level for plants whose products become feeds to other plants or are sold in the same markets.

A digital twin, then, can become the connective tissue that drives a business to increased operational excellence, efficiency, and profitability. It enables increased collaboration and visibility of business decisions and shows leadership how these decisions affect every part of the business. More importantly, by democratizing information and results, the digital twin enables a business to act as an organism, where every part of the business acts and reacts towards a common goal: Increased profits.

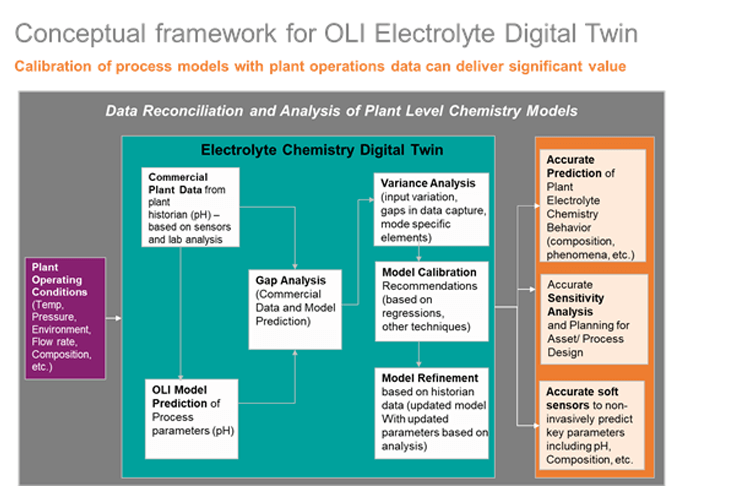

Below is an example of a Digital Twin Framework that illustrates the concepts reviewed above. We will discuss this in more detail in upcoming blogs on Digital Twins and Digital Transformation.

For more information on OLI’s digital twins and other products and solutions, contact sales@olisystems.com.